The arch= clause of the -gencode= command-line option to nvcc specifies the front-end compilation target and must always be a PTX version. The code= clause specifies the back-end compilation target and can be cubin, PTX, or both.

Sample nvcc gencode and arch Flags in GCC

SM90a or SM_90a, compute_90a (for PTX ISA version 8.0): adds acceleration for features like wgmma and setmaxnreg.

NVIDIA GeForce RTX 4090, RTX 4080, RTX 6000, Tesla L40

“Devices of compute capability 8.6 have 2x more FP32 operations per cycle per SM than devices of compute capability 8.0. While a binary compiled for 8.0 will run as is on 8.6, it is recommended to compile explicitly for 8.6 to benefit from the increased FP32 throughput.”
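To make the arch=/code= split described above concrete, here is a minimal Python sketch that assembles a single -gencode option. The helper name `gencode_flag` is my own invention for illustration and is not part of nvcc or any CUDA tooling. It only builds a string, and the bracketed form for multiple code= targets follows the list syntax that nvcc accepts.

```python
def gencode_flag(arch, codes):
    """Build one nvcc -gencode option (hypothetical helper, not an nvcc API).

    arch  -- the front-end (PTX) target, e.g. "compute_86"
    codes -- back-end targets: cubin ("sm_86") and/or PTX ("compute_86")
    """
    # The arch= clause must always be a PTX (virtual) target.
    if not arch.startswith("compute_"):
        raise ValueError("arch= must name a PTX target such as compute_86")
    if not codes:
        raise ValueError("code= needs at least one target")
    if len(codes) == 1:
        return f"-gencode=arch={arch},code={codes[0]}"
    # Several back-end targets are written as a bracketed list.
    return f"-gencode=arch={arch},code=[{','.join(codes)}]"


# cubin only, then cubin plus PTX for the same architecture
print(gencode_flag("compute_86", ["sm_86"]))
print(gencode_flag("compute_86", ["sm_86", "compute_86"]))
```

When the result is typed into a shell rather than passed as an argument list, the bracketed form may need quoting.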

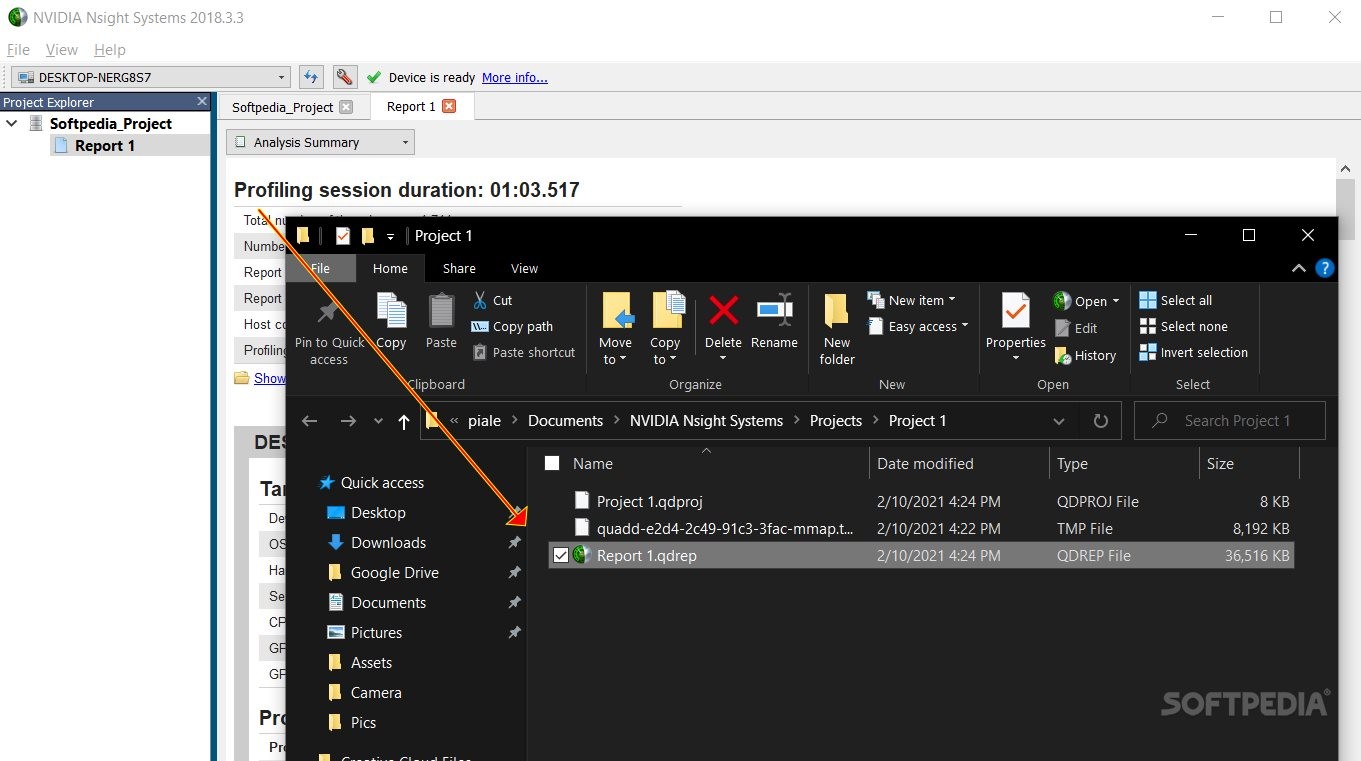

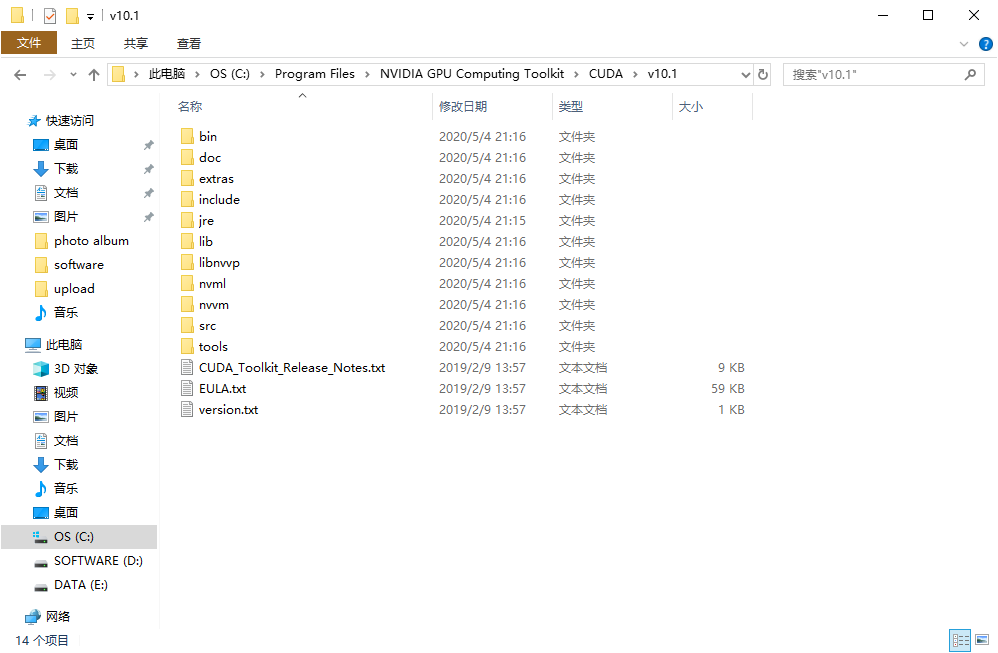


Fermi cards (CUDA 3.2 until CUDA 8)

Deprecated from CUDA 9, support completely dropped from CUDA 10.

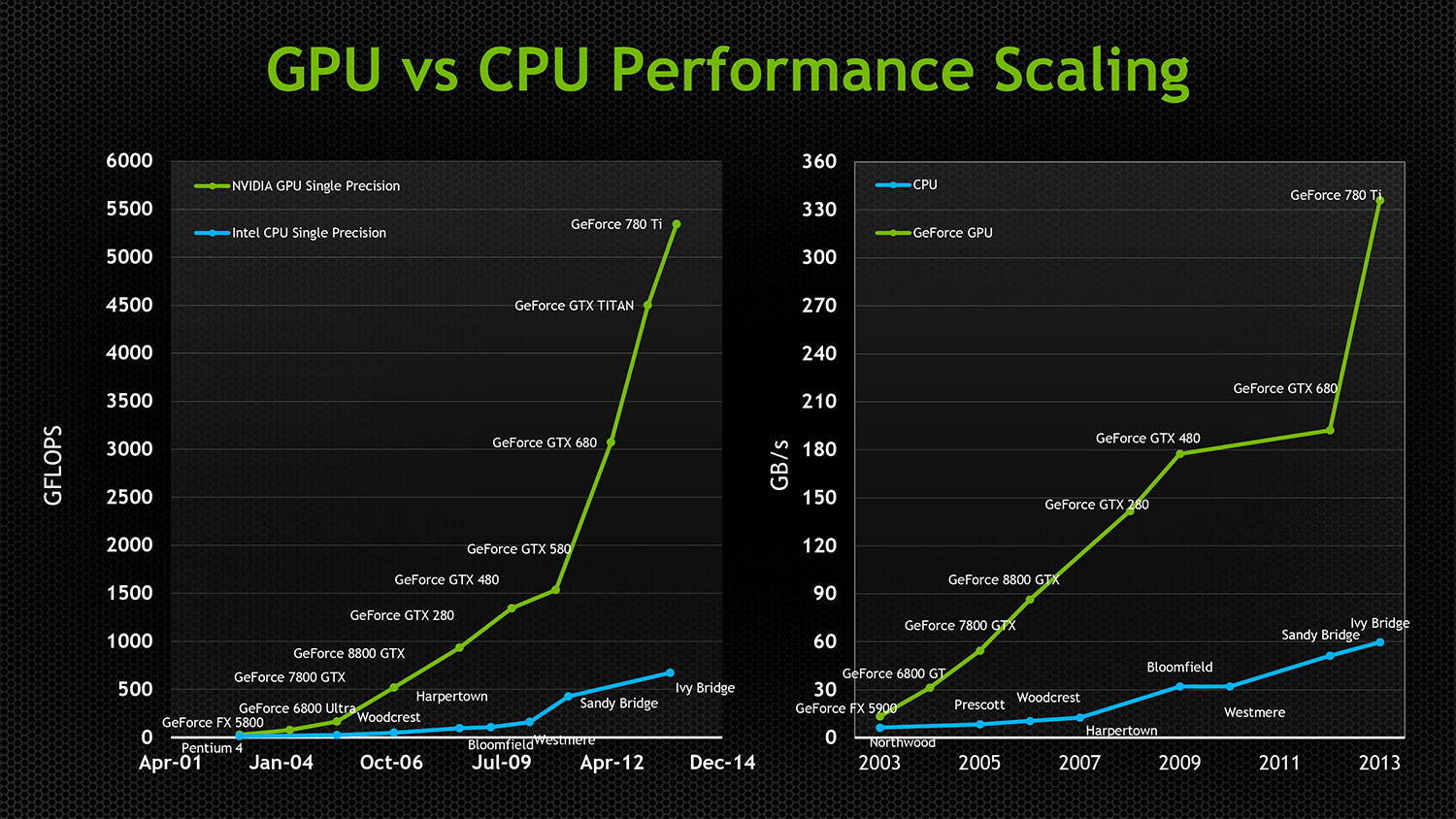

When you want to speed up CUDA compilation, you want to reduce the number of irrelevant ‘-gencode’ flags. However, sometimes you may wish to have better CUDA backwards compatibility by adding more comprehensive ‘-gencode’ flags.

Before you continue, identify which GPU you have and which CUDA version you have installed.

Supported SM and Gencode variations

Below are the supported sm variations and sample cards from that generation. I’ve tried to supply representative NVIDIA GPU cards for each architecture name, and CUDA version.
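For the "identify which GPU you have" step, one possible approach is to ask `nvidia-smi` for each card's name and compute capability and turn the answer into an `sm_XX` name. The sketch below assumes a driver recent enough to support the `compute_cap` query field. The function names `parse_compute_caps` and `query_gpus` are hypothetical, and the card names in the usage comment are only sample input.

```python
import subprocess


def parse_compute_caps(csv_text):
    """Turn 'name, compute_cap' CSV lines into (name, sm_target) pairs."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so commas inside a name would not break parsing.
        name, cap = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, "sm_" + cap.replace(".", "")))
    return gpus


def query_gpus():
    """Run nvidia-smi and parse its output (assumes compute_cap is supported)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,compute_cap", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_compute_caps(result.stdout)


if __name__ == "__main__":
    try:
        for name, sm in query_gpus():
            print(f"{name}: {sm}")
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi is unavailable or does not support compute_cap")
```

The installed CUDA toolkit version can be checked separately with `nvcc --version`.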


When should different ‘gencodes’ or ‘cuda arch’ be used?

When you compile CUDA code, you should always compile with only one ‘-arch’ flag that matches your most used GPU cards. This will enable faster runtime, because code generation will occur during compilation. If the build embeds only PTX for your GPU and no matching cubin (for example, ‘-gencode’ entries whose code= names a compute_XX target rather than sm_XX), the GPU code generation will occur in the JIT compiler of the CUDA driver.

‡ Maxwell is deprecated from CUDA 11.6 onwards

Gencodes (‘-gencode’) allow for more PTX generations and can be repeated many times for different architectures.

Here’s a list of NVIDIA architecture names, and which compute capabilities they have:

Fermi †

† Fermi and Kepler are deprecated from CUDA 9 and 11 onwards, respectively
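Since ‘-gencode’ can be repeated once per architecture, a build script can generate the whole list from the compute capabilities it needs to support. The following is a rough sketch under my own assumptions: `gencode_flags` is a hypothetical helper, capabilities are given as plain integers such as 80 or 86, and a PTX entry for the newest architecture is appended so that later GPUs can fall back to JIT compilation.

```python
def gencode_flags(capabilities, ptx_fallback=True):
    """Return one -gencode flag per compute capability (hypothetical helper).

    capabilities -- integers such as 80 or 86 (compute capability 8.0, 8.6)
    ptx_fallback -- also embed PTX for the newest capability in the list
    """
    caps = sorted(set(capabilities))
    # One cubin (sm_XX) entry per requested architecture.
    flags = [f"-gencode=arch=compute_{c},code=sm_{c}" for c in caps]
    if ptx_fallback and caps:
        newest = caps[-1]
        # PTX for the newest target lets the driver JIT for later GPUs.
        flags.append(f"-gencode=arch=compute_{newest},code=compute_{newest}")
    return flags


for flag in gencode_flags([86, 80]):
    print(flag)
```

A shorter list compiles faster, while a longer one covers more cards without relying on the JIT, which is the trade-off between fewer and more ‘-gencode’ flags.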

I’ve seen some confusion regarding NVIDIA’s nvcc sm flags and what they’re used for:

When compiling with NVCC, the arch flag (‘-arch’) specifies the name of the NVIDIA GPU architecture that the CUDA files will be compiled for.
